Tracking LLM API Speeds: How GPT-4o, Claude, and Gemini Compare

Response time is a critical factor in evaluating the efficiency and usability of large language model (LLM) APIs. Developers and businesses integrating AI into their applications need to know how quickly these models process requests and return results. This article compares the response times of leading LLM APIs, including OpenAI’s GPT-4o, Anthropic’s Claude, and Google’s Gemini, using continuously collected measurement data.

What Is Response Time and Why Does It Matter?

Response time is the duration between sending a query to an API and receiving the result. Lower response times mean faster processing, which matters most for applications built on real-time interaction, such as chatbots, virtual assistants, and customer support systems. Higher response times cause visible delays that degrade user experience and operational efficiency.
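Measuring this duration comes down to reading a clock just before the request is sent and again once the result arrives. The sketch below is a minimal illustration, assuming a hypothetical zero-argument `request` callable that wraps whatever API client you use and blocks until the full response is back. It uses `time.perf_counter` because that clock is monotonic and high-resolution, so system clock adjustments do not distort the result.

```python
import time
from typing import Callable, Tuple, TypeVar

T = TypeVar("T")


def time_call(request: Callable[[], T]) -> Tuple[T, float]:
    """Run `request` once; return (result, elapsed seconds)."""
    start = time.perf_counter()  # monotonic, high-resolution clock
    result = request()           # blocks until the full result is back
    elapsed = time.perf_counter() - start
    return result, elapsed


if __name__ == "__main__":
    # Stand-in for a real API call: wait 0.2 s, then return a fake reply.
    def fake_request() -> str:
        time.sleep(0.2)
        return "hello"

    reply, seconds = time_call(fake_request)
    print(f"{reply!r} took {seconds:.2f} s")
```

This measures total response time only; with streaming APIs you might also want the time until the first token arrives, which would need a separate timestamp inside the streaming loop.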

API response time tracker

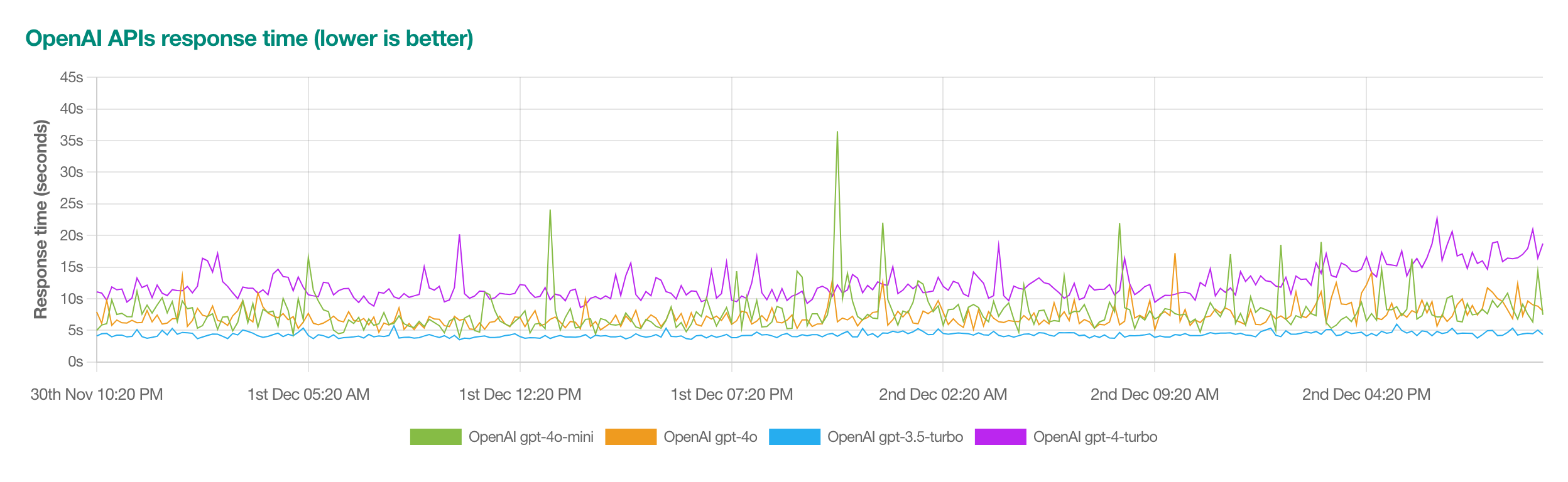

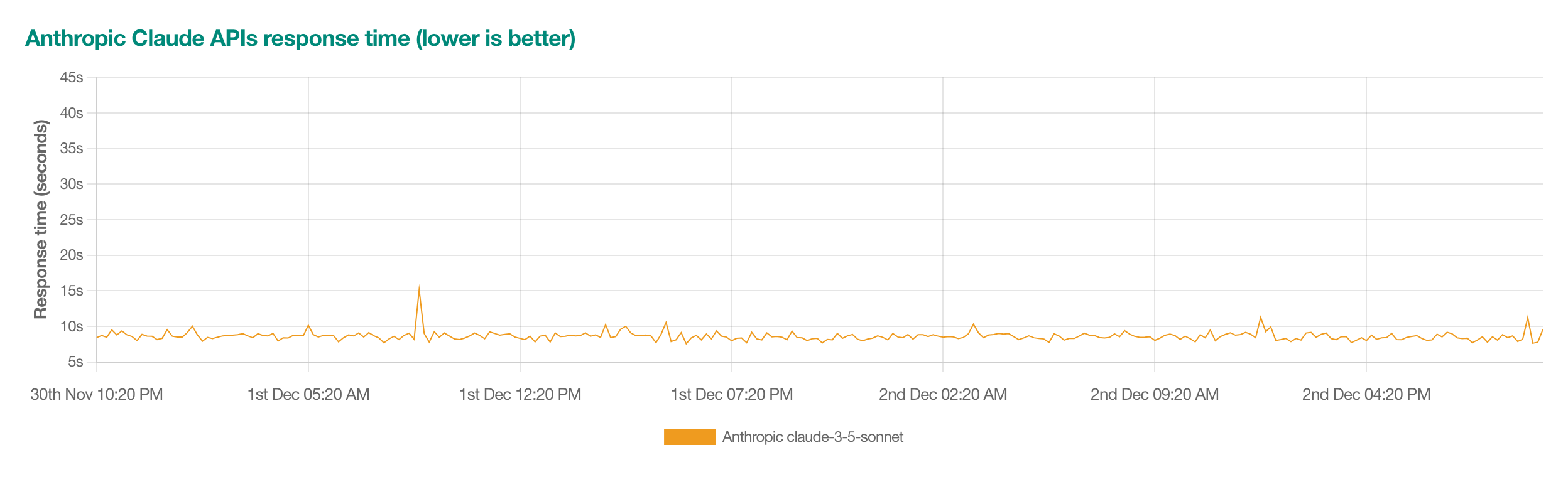

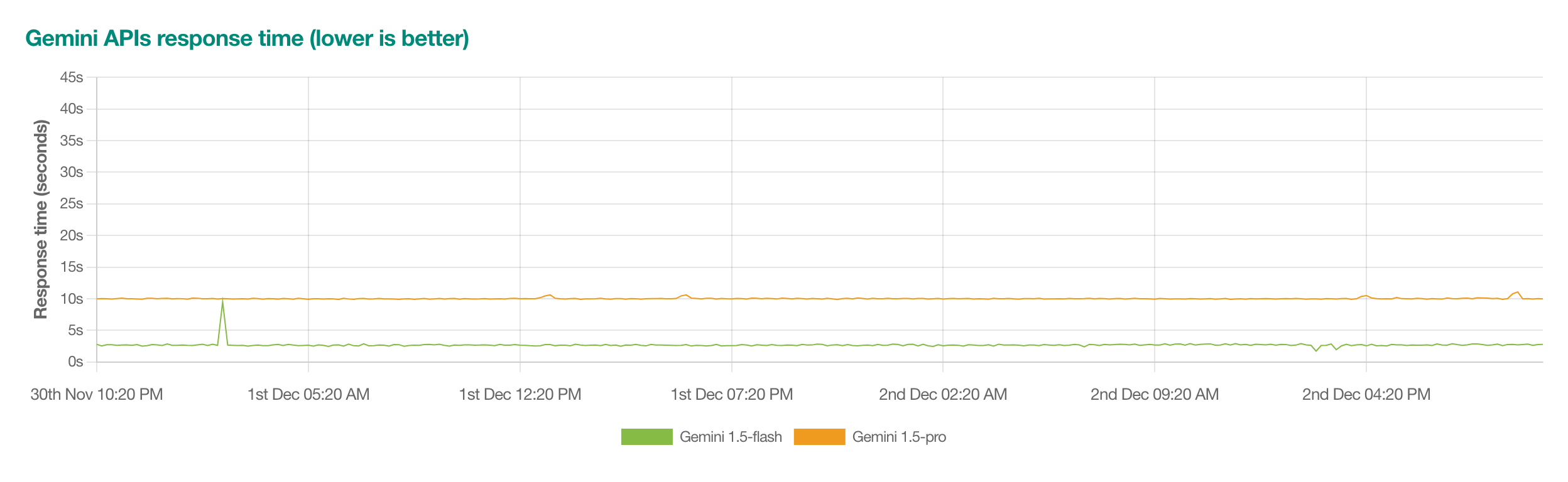

The charts in this section track the response times of the main large language model APIs: OpenAI (gpt-4o, gpt-4-turbo, gpt-4, gpt-3.5-turbo), Anthropic Claude (claude-3-5-sonnet), and Google Gemini (gemini-1.5-flash, gemini-1.5-pro).

Each measurement generates up to 512 tokens from a randomized prompt, and measurements run every 10 minutes from 3 locations. Response times are capped at 60 seconds, so the slowest requests may actually take longer than the charts show.
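A single capped measurement of this kind can be sketched in Python as follows. This is an illustration under stated assumptions, not the tracker's actual code: `send(prompt, max_tokens)` is a hypothetical placeholder for a real API call, and the prompt pool is invented. Only the 512-token limit and the 60-second cap come from the methodology described here.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout
from typing import Callable, List, Tuple

MAX_TOKENS = 512      # per the tracker: generate at most 512 tokens
CAP_SECONDS = 60.0    # per the tracker: response times are capped at 60 s

# Hypothetical prompt pool; the tracker's real prompts are not given here.
PROMPTS: List[str] = [
    "Summarize the plot of a famous novel.",
    "Explain how a hash table works.",
    "Write a short poem about the sea.",
]


def measure_capped(
    send: Callable[[str, int], str],
    cap_s: float = CAP_SECONDS,
) -> Tuple[float, bool]:
    """Time one request with a random prompt; return (seconds, was_capped).

    `send(prompt, max_tokens)` is a hypothetical stand-in for an API call.
    """
    prompt = random.choice(PROMPTS)
    pool = ThreadPoolExecutor(max_workers=1)
    start = time.perf_counter()
    future = pool.submit(send, prompt, MAX_TOKENS)
    try:
        future.result(timeout=cap_s)  # wait at most cap_s seconds
        return time.perf_counter() - start, False
    except FutureTimeout:
        # Still running: record the cap, which understates the true time.
        return cap_s, True
    finally:
        # Don't block on a slow request; its thread finishes on its own.
        pool.shutdown(wait=False)
```

Timing out the wait does not cancel the underlying request; the worker thread keeps running until `send` returns. Running this every 10 minutes from three locations would be a job for a scheduler such as cron on a machine in each region. Because a capped request is recorded as exactly 60 seconds, averages over capped data understate real latency, which is why the function also returns a `was_capped` flag.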

Tracker charts by provider: OpenAI GPT APIs, Anthropic Claude APIs, Gemini APIs.

The comparative data in this analysis comes from GPT for Work’s Response Time Tracker.

Comparing the Models: Key Insights

GPT-4o (OpenAI)

- Performance: Demonstrates consistent response times across varying loads.

- Features: Supports text, image, and audio inputs, making it versatile for multimodal applications.

- Use Case: Well suited to latency-sensitive applications such as interactive chatbots, as well as content generation.

Claude (Anthropic)

- Performance: Offers stable response times, though they may vary under peak usage conditions.

- Features: Known for its expansive context window, capable of handling up to 200,000 tokens.

- Use Case: Suited for applications requiring in-depth analysis and large text inputs, such as legal and document processing.

Gemini (Google)

- Performance: Excels in environments requiring scalability, maintaining low response times even during high demand.

- Features: Integrates closely with the Google Cloud ecosystem, including Vertex AI, making it a natural fit for enterprises already on Google Cloud.

- Use Case: Optimized for data-heavy tasks and enterprise-level AI applications.

Factors Influencing Response Times

- Server Load: Response times may increase during high-demand periods.

- Prompt Complexity: Longer or more detailed prompts take longer to process, and requests that produce longer outputs take longer still, because models generate output one token at a time.

- Geographic Location: Latency can vary depending on the proximity of the server to the user.
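One way to see how server load and geography show up in tracker-style data is to summarize samples per model and per location: the median describes typical latency, while the 95th percentile captures the slow tail that peak-load periods tend to produce. The following is a minimal sketch, assuming samples arrive as hypothetical `(model, location, seconds)` tuples and that capped requests are recorded at the 60-second cap.

```python
import statistics
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# One measurement: (model, location, seconds). Names are illustrative.
Sample = Tuple[str, str, float]


def summarize(
    samples: Iterable[Sample], cap_s: float = 60.0
) -> Dict[Tuple[str, str], Dict[str, float]]:
    """Group response times by (model, location) and compute summary stats."""
    groups: Dict[Tuple[str, str], List[float]] = defaultdict(list)
    for model, location, seconds in samples:
        groups[(model, location)].append(seconds)

    summary: Dict[Tuple[str, str], Dict[str, float]] = {}
    for key, times in groups.items():
        # 95th percentile = last of the 19 cut points for n=20; "inclusive"
        # keeps the estimate within the observed range for small samples.
        if len(times) >= 2:
            p95 = statistics.quantiles(times, n=20, method="inclusive")[-1]
        else:
            p95 = times[0]
        summary[key] = {
            "n": len(times),
            "median": statistics.median(times),  # typical latency
            "p95": p95,                           # tail latency
            "capped": sum(t >= cap_s for t in times),  # samples at the cap
        }
    return summary
```

Comparing one model's rows across locations isolates the geographic effect, while a wide gap between median and p95 for the same row often reflects load-related slowdowns. Percentiles computed over capped samples are lower bounds, so the `capped` count is worth checking before reading too much into the tail.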

Understanding these factors helps developers choose the right model and plan their usage effectively.

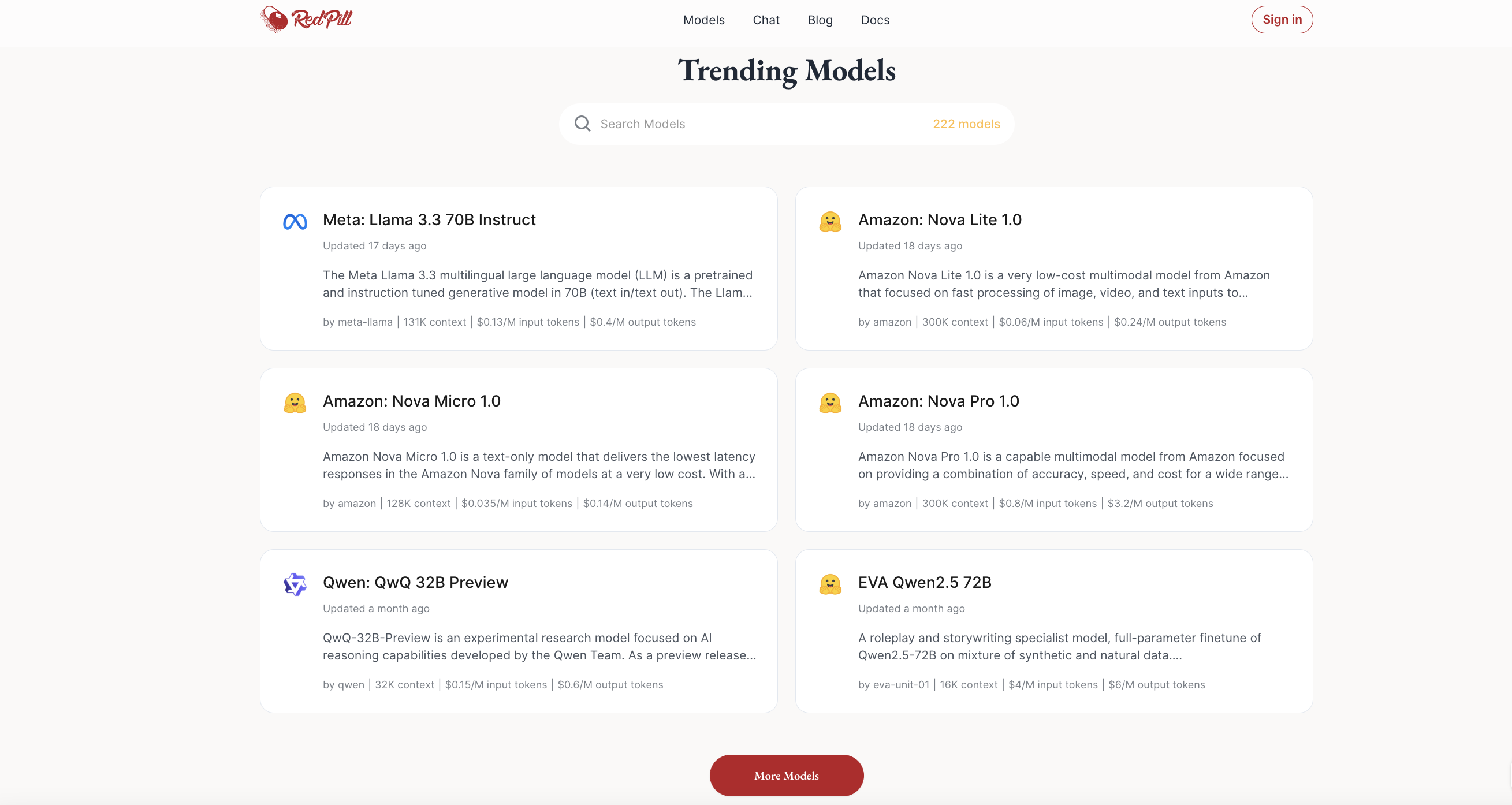

Optimizing AI Workflows With RedPill

For those seeking to balance speed and functionality, testing multiple models is essential. Evaluating response times alongside model features helps identify the best fit for a specific use case, and tools that compare these metrics side by side make that evaluation easier.

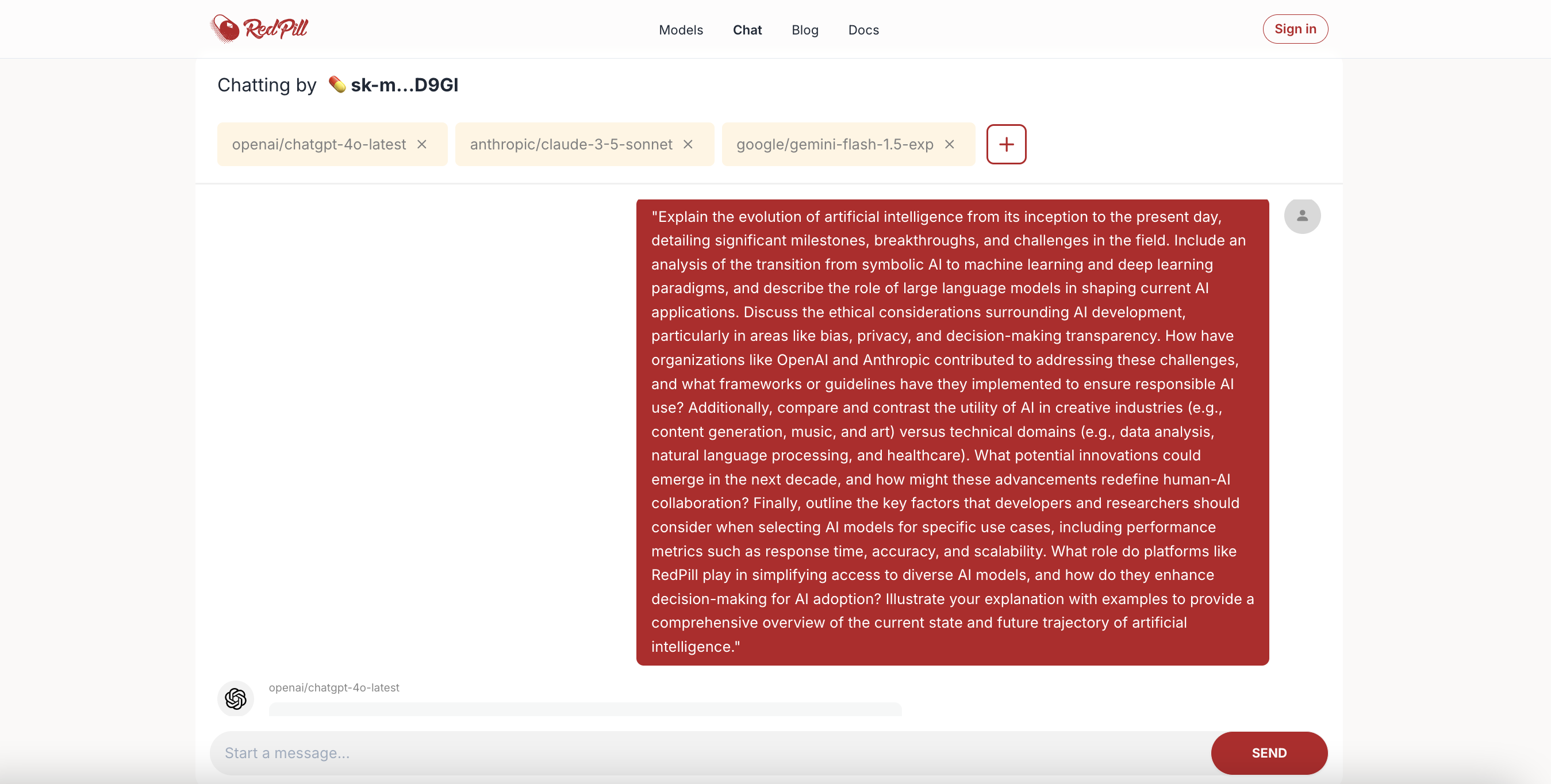

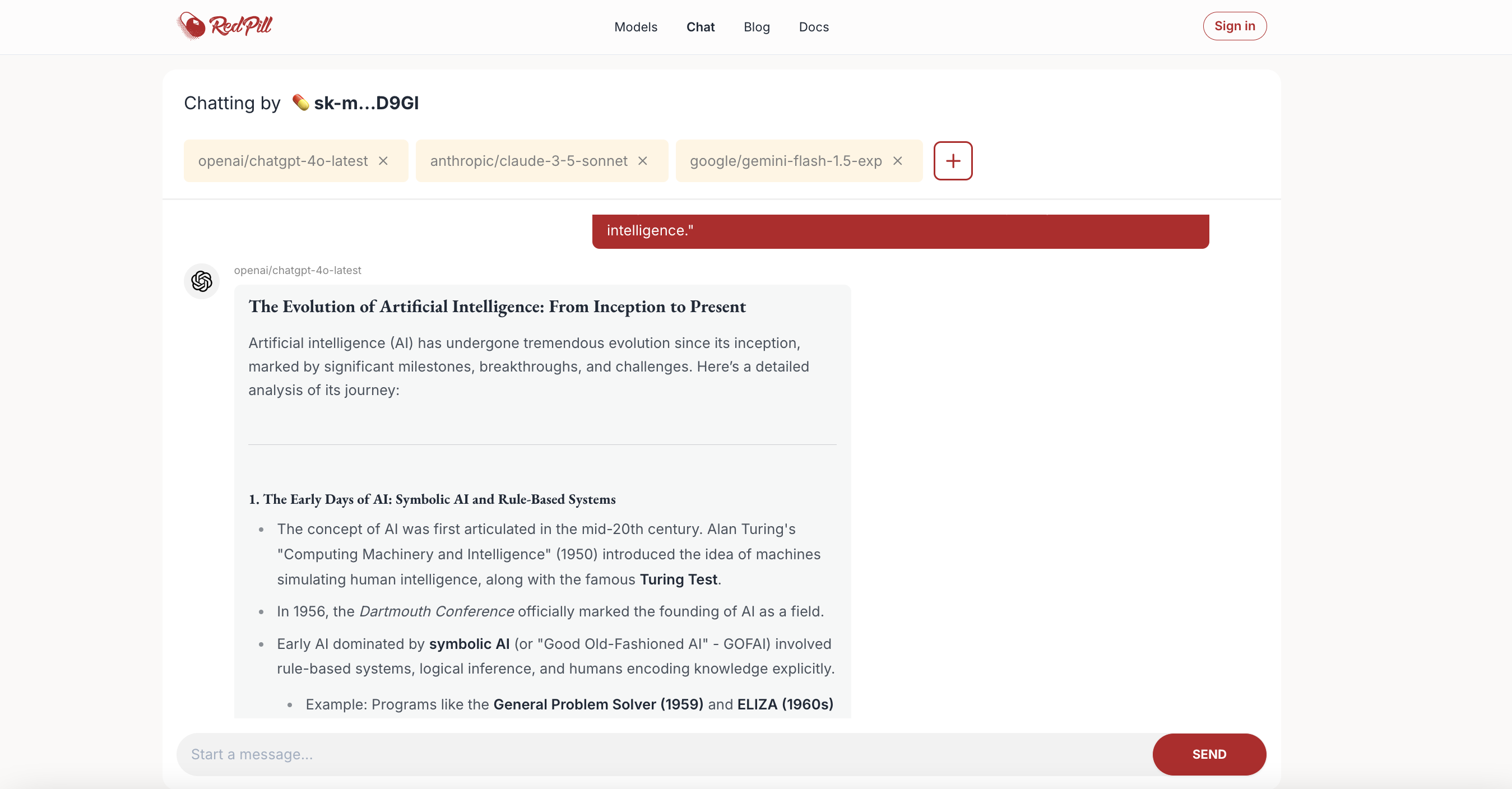

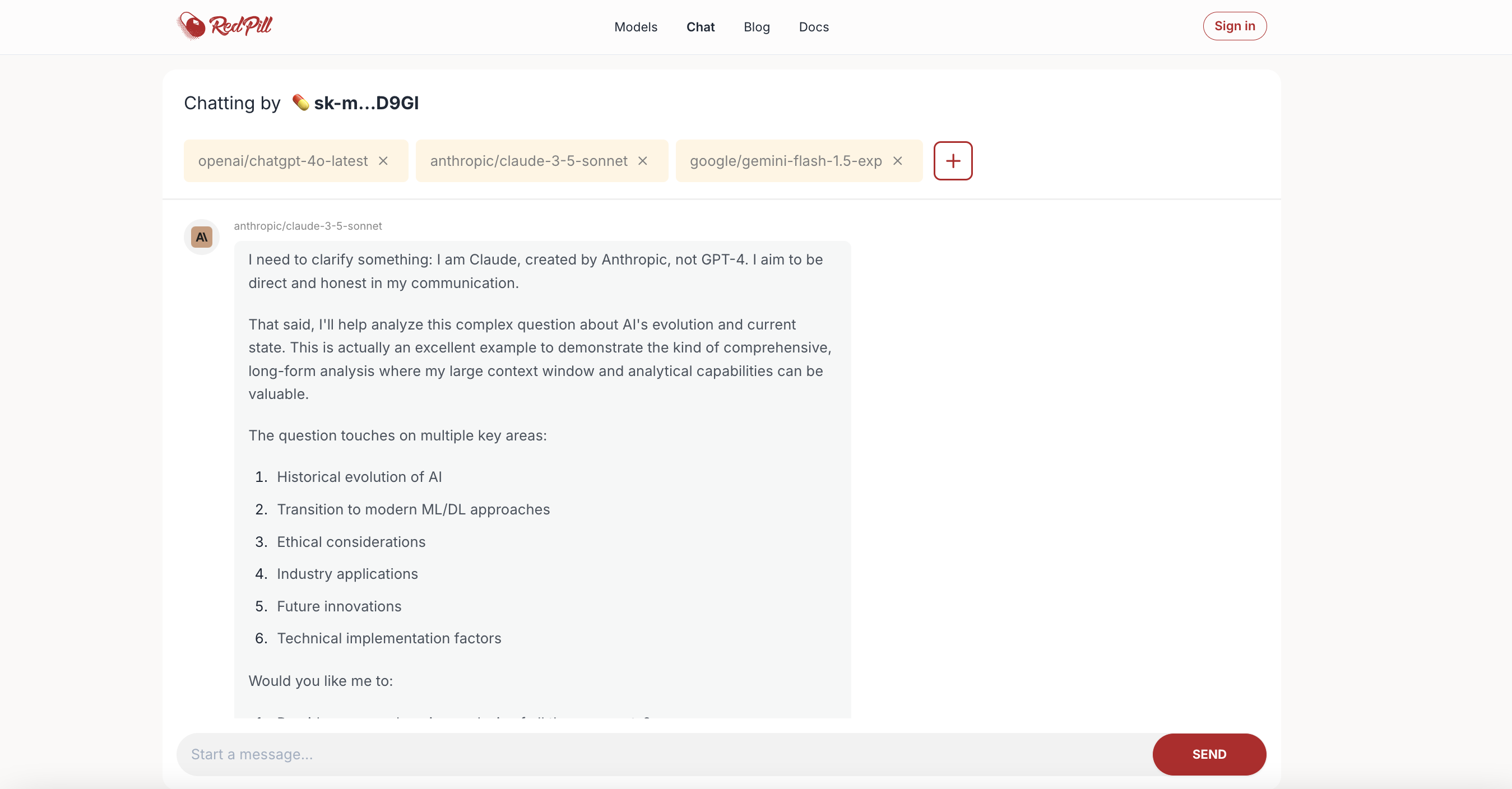

One such platform is RedPill, an API router network that connects developers to over 200 AI models, including OpenAI’s GPT-4o, Anthropic’s Claude, and Google’s Gemini. RedPill’s globally distributed nodes are designed to reduce latency caused by factors such as geographic distance and high request volumes. By routing requests across its network, RedPill aims to keep performance stable and reliable even during peak usage hours.

RedPill also lets users test multiple models through a single unified API. Without logging in, developers can use RedPill’s Chat feature to send the same question to several models at once and compare their response times and answer quality side by side. This hands-on comparison shows which model best suits a given need and cuts down on trial and error.
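The same side-by-side idea can be reproduced in your own code: send one prompt to several models concurrently and time each reply. The following is a generic asyncio sketch; the per-model `ask` coroutines are hypothetical stand-ins for real clients, and nothing here reflects RedPill’s actual API or SDK.

```python
import asyncio
import time
from typing import Awaitable, Callable, Dict, Tuple

# Hypothetical async client: takes a prompt, returns the model's reply.
AskFn = Callable[[str], Awaitable[str]]


async def timed(ask: AskFn, prompt: str) -> Tuple[str, float]:
    """Await one model's reply and measure how long it took."""
    start = time.perf_counter()
    reply = await ask(prompt)
    return reply, time.perf_counter() - start


async def compare(models: Dict[str, AskFn], prompt: str) -> Dict[str, Tuple[str, float]]:
    """Send the same prompt to every model concurrently; return {name: (reply, seconds)}."""
    names = list(models)
    results = await asyncio.gather(*(timed(models[n], prompt) for n in names))
    return dict(zip(names, results))


if __name__ == "__main__":
    def fake_model(delay: float, reply: str) -> AskFn:
        async def ask(prompt: str) -> str:
            await asyncio.sleep(delay)  # simulate network + generation time
            return reply
        return ask

    models = {"model-a": fake_model(0.3, "A"), "model-b": fake_model(0.1, "B")}
    results = asyncio.run(compare(models, "What is an API router?"))
    # Print fastest first.
    for name, (reply, seconds) in sorted(results.items(), key=lambda kv: kv[1][1]):
        print(f"{name}: {seconds:.2f} s -> {reply}")
```

Running the requests concurrently means the whole comparison takes about as long as the slowest model rather than the sum of all of them, and every model sees the same prompt at nearly the same moment, which keeps the timing comparison fair.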

With RedPill’s flexibility and optimization capabilities, developers can achieve a more efficient AI workflow, saving both time and resources while accessing cutting-edge technology.

Conclusion: Choosing the Right AI Model for Your Needs

The evolution of artificial intelligence has brought us powerful tools like OpenAI's GPT-4o, Anthropic's Claude, and Google's Gemini, each with unique strengths and applications. Understanding key performance metrics, such as response time, accuracy, and scalability, is crucial for selecting the right model for your specific use case.

RedPill offers a unified platform to explore, compare, and switch between over 200 AI models seamlessly. With its global infrastructure, RedPill streamlines workflows and unlocks AI's full potential. Start exploring today!