How to use LLM APIs — OpenAI, Claude, Google

Integrating large language models such as OpenAI's GPT-4o, Anthropic's Claude 3.5 Sonnet, and Google's Gemini 1.5 Pro lets your applications generate text, answer questions, and write code. This guide walks through accessing each model through its provider's API, then shows how a unified gateway can simplify working with several providers at once.

Accessing OpenAI's GPT-4o

OpenAI's GPT-4o is accessible through the OpenAI API, offering advanced language understanding and generation capabilities. To integrate GPT-4o into your applications:

- Sign Up: Create an account on the OpenAI Platform.

- Obtain API Key: Navigate to the API section to generate a unique API key.

- Install OpenAI SDK: Use the OpenAI Python library for seamless integration:

```shell
pip install openai
```

- Implement API Calls: Use the API key to make requests to GPT-4o:

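```python
from openai import OpenAI

# Create a client; if api_key is omitted, the SDK reads OPENAI_API_KEY from the environment
client = OpenAI(api_key="your-api-key")

# GPT-4o is a chat model, so it is called through the Chat Completions endpoint
response = client.chat.completions.create(
    model="gpt-4o",
    messages=[{"role": "user", "content": "Your prompt here"}],
    max_tokens=150,
)

print(response.choices[0].message.content)
```

For detailed documentation, refer to the OpenAI API Reference.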
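Hard-coding the key as in the snippet above makes it easy to leak through version control. A minimal sketch of the usual alternative, assuming you export the key as the `OPENAI_API_KEY` environment variable (the name the official SDK falls back to when no `api_key` is passed), is to load it at startup and fail fast with a clear message. The helper name `load_api_key` is hypothetical.

```python
import os


def load_api_key(var_name: str) -> str:
    """Return the API key stored in the environment variable `var_name`.

    Raises RuntimeError with a readable message instead of letting the
    SDK fail later with an authentication error.
    """
    # Strip stray whitespace that often sneaks in when keys are pasted
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {var_name} environment variable before running this script."
        )
    return key
```

Pass the result to the client, e.g. `OpenAI(api_key=load_api_key("OPENAI_API_KEY"))`; the same helper works for `ANTHROPIC_API_KEY` with the Anthropic client.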

Accessing Anthropic's Claude 3.5 Sonnet

Claude 3.5 Sonnet, Anthropic's flagship model at the time of writing, offers strong reasoning and coding capabilities. To integrate Claude 3.5 Sonnet:

- Sign Up: Register on the Anthropic Console.

- Obtain API Key: Generate an API key from your account dashboard.

- Install Anthropic SDK: Install the Anthropic Python library:

```shell
pip install anthropic
```

- Implement API Calls: Use the API key to interact with Claude 3.5 Sonnet:

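```python
import anthropic

client = anthropic.Anthropic(api_key="your-api-key")

# Claude 3 and later models use the Messages API; max_tokens is required.
# Check Anthropic's model list for the model IDs currently available.
message = client.messages.create(
    model="claude-3-5-sonnet-20241022",
    max_tokens=150,
    messages=[{"role": "user", "content": "Your prompt here"}],
)

# The reply is a list of content blocks; the first one holds the text
print(message.content[0].text)
```

For more information, visit the Anthropic API Documentation.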
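Chat histories written for OpenAI cannot be passed to Claude unchanged: in Anthropic's Messages API the system prompt is a top-level `system` parameter rather than a message with role `system`, and `max_tokens` must always be supplied. A minimal sketch of converting an OpenAI-style message list into keyword arguments for `client.messages.create`; the helper `to_anthropic_kwargs` is hypothetical and assumes plain-text messages only.

```python
from typing import Any


def to_anthropic_kwargs(
    messages: list[dict[str, str]], model: str, max_tokens: int = 150
) -> dict[str, Any]:
    """Convert OpenAI-style chat messages into Anthropic Messages API kwargs.

    Messages with role "system" are joined into the top-level `system`
    parameter; "user" and "assistant" messages are kept in order.
    """
    system_parts = [m["content"] for m in messages if m["role"] == "system"]
    chat = [
        {"role": m["role"], "content": m["content"]}
        for m in messages
        if m["role"] in ("user", "assistant")
    ]
    kwargs: dict[str, Any] = {"model": model, "max_tokens": max_tokens, "messages": chat}
    if system_parts:
        kwargs["system"] = "\n\n".join(system_parts)
    return kwargs
```

Usage: `client.messages.create(**to_anthropic_kwargs(history, "claude-3-5-sonnet-20241022"))`, where `history` is a list of `{"role": ..., "content": ...}` dictionaries.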

Accessing Google's Gemini 1.5 Pro

Google's Gemini 1.5 Pro is a multimodal model optimized for a wide range of reasoning tasks. To integrate Gemini 1.5 Pro:

- Sign Up: Create an account on the Google Cloud Console, then create or select a project with billing enabled.

- Enable Vertex AI: Activate the Vertex AI API in your project settings.

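- Authenticate: Vertex AI normally uses Google Cloud credentials rather than a standalone API key. For local development, run `gcloud auth application-default login`; in production, attach a service account to your workload.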

- Install the Vertex AI SDK: The Vertex AI SDK for Python is distributed as the google-cloud-aiplatform package:

```shell
pip install google-cloud-aiplatform
```

- Implement API Calls: Send requests to Gemini 1.5 Pro:

```python
import vertexai
from vertexai.generative_models import GenerativeModel

# Uses Application Default Credentials for authentication
vertexai.init(project="your-project-id", location="us-central1")

# Check the Vertex AI model list for the Gemini versions currently available
model = GenerativeModel("gemini-1.5-pro")
response = model.generate_content("Your prompt here")

print(response.text)
```

For detailed documentation, refer to the Google Cloud Vertex AI Documentation.
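Gemini expects yet another message shape: each turn has a `role` of `"user"` or `"model"` (not `"assistant"`) and a list of `parts`, while a system prompt travels separately as `systemInstruction`. A minimal sketch that converts OpenAI-style messages into the JSON body of a `generateContent` REST request; `to_gemini_request` is a hypothetical helper and handles text-only turns.

```python
from typing import Any

# Gemini calls the assistant role "model"; system messages are handled separately.
_ROLE_MAP = {"user": "user", "assistant": "model"}


def to_gemini_request(messages: list[dict[str, str]]) -> dict[str, Any]:
    """Build a generateContent request body from OpenAI-style chat messages."""
    body: dict[str, Any] = {
        "contents": [
            {"role": _ROLE_MAP[m["role"]], "parts": [{"text": m["content"]}]}
            for m in messages
            if m["role"] in _ROLE_MAP
        ]
    }
    # Collect any system prompts into the separate systemInstruction field
    system_parts = [{"text": m["content"]} for m in messages if m["role"] == "system"]
    if system_parts:
        body["systemInstruction"] = {"parts": system_parts}
    return body
```

Keeping one internal message format and converting at the edge, as in this and the Anthropic sketch, is what a multi-provider application ends up doing by hand.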

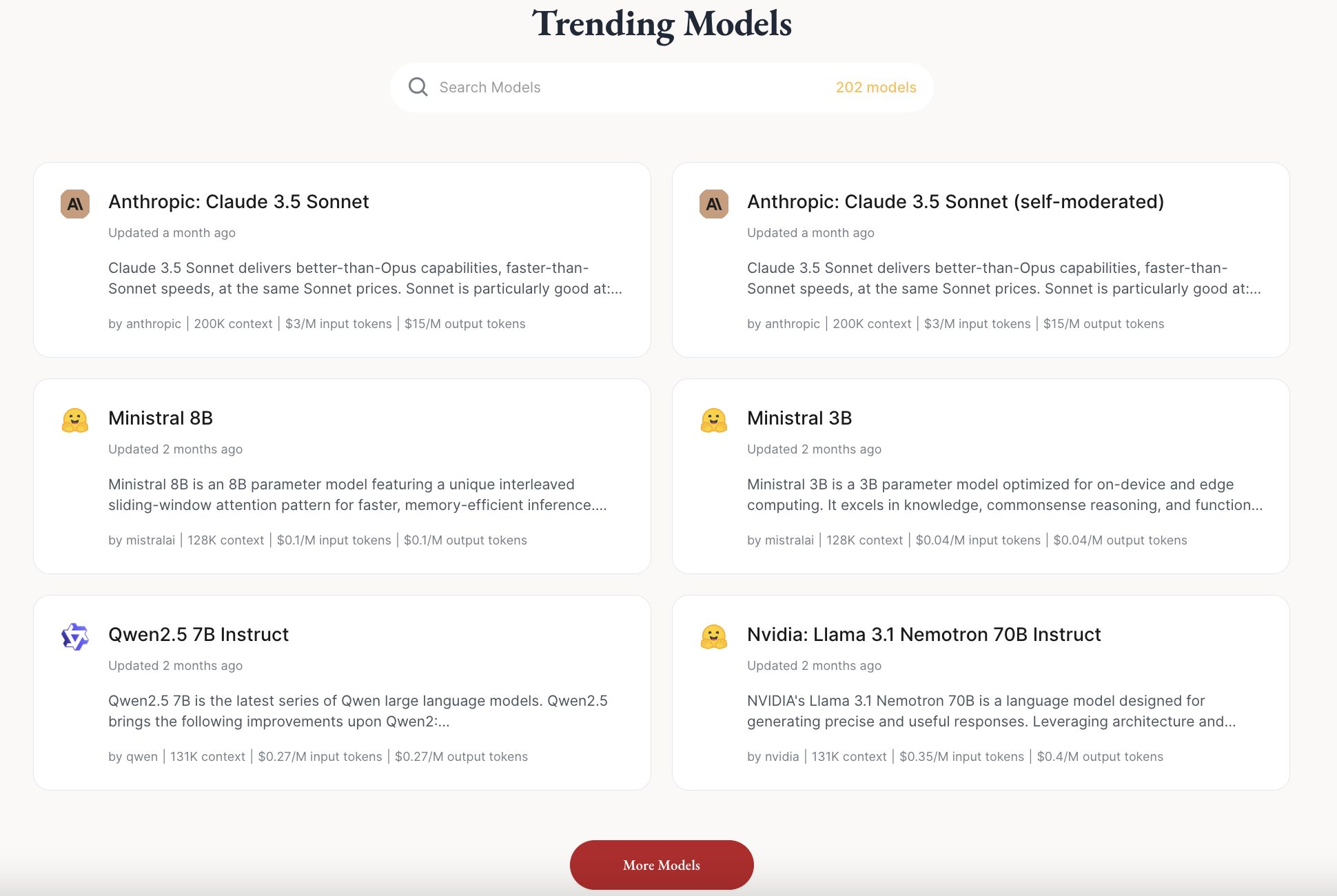

Introducing RedPill: Simplifying AI Model Integration

Managing multiple platforms for accessing various AI models can be complex and time-consuming. RedPill addresses this challenge by providing a unified platform that consolidates access to over 200 top AI models, including OpenAI's GPT-4o, Anthropic's Claude 3.5 Sonnet, Google's Gemini 1.5 Pro, Llama 2, and Mistral 7B.

Seamless API Calls Across Languages

The following examples show how to call the GPT-4o model through RedPill’s unified API:

1. JavaScript

For web-based applications, make a simple fetch call:

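```javascript
// Keep the key server-side: code shipped to browsers exposes it to users
fetch("https://api.red-pill.ai/v1/chat/completions", {
  method: "POST",
  headers: {
    "Authorization": "Bearer <YOUR-REDPILL-API-KEY>",
    "Content-Type": "application/json"
  },
  body: JSON.stringify({
    model: "gpt-4o",
    messages: [
      { role: "user", content: "What is the meaning of life?" }
    ]
  })
})
  .then((response) => response.json()) // parse the JSON response body
  .then((data) => console.log(data))
  .catch((error) => console.error(error));
```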

2. Python

Python developers can use the requests library for API calls:

```python
import requests

response = requests.post(
    url="https://api.red-pill.ai/v1/chat/completions",
    headers={"Authorization": "Bearer <YOUR-REDPILL-API-KEY>"},
    # json= serializes the body and sets Content-Type: application/json
    json={
        "model": "gpt-4o",
        "messages": [
            {"role": "user", "content": "What is the meaning of life?"}
        ],
    },
)
response.raise_for_status()  # raise on HTTP errors such as 401 or 429

print(response.json())
```

3. Shell

For quick testing or integration in command-line tools:

```shell
curl https://api.red-pill.ai/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer <YOUR-REDPILL-API-KEY>" \
  -d '{
    "model": "gpt-4o",
    "messages": [
      {"role": "user", "content": "What is the meaning of life?"}
    ]
  }'
```
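Each of the examples above stops at the raw JSON response. To pull out just the reply text, a minimal sketch follows; it assumes (this guide does not confirm it) that RedPill returns the same response shape as OpenAI's Chat Completions API, with the text at `choices[0].message.content`, and that error responses carry an `error` object. The helper `extract_reply` is hypothetical.

```python
from typing import Any


def extract_reply(payload: dict[str, Any]) -> str:
    """Return the assistant text from a chat-completions style JSON response.

    ASSUMPTION: the response follows the OpenAI Chat Completions shape,
    {"choices": [{"message": {"role": "assistant", "content": ...}}]},
    and error responses contain an "error" key instead.
    """
    if "error" in payload:
        raise RuntimeError(f"API error: {payload['error']}")
    choices = payload.get("choices") or []
    if not choices:
        raise ValueError("Response contained no choices")
    return choices[0]["message"]["content"]
```

With the Python example, `print(extract_reply(response.json()))` prints only the model's answer instead of the whole payload.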

Effortless Model Switching with RedPill

Transitioning between models is straightforward with RedPill. For instance, to switch from GPT-4o to Claude 3.5 Sonnet, simply modify the model parameter in your API request:

```python
import requests

response = requests.post(
    url="https://api.red-pill.ai/v1/chat/completions",
    headers={"Authorization": "Bearer <YOUR-REDPILL-API-KEY>"},
    json={
        # Only this field changes when switching models
        "model": "claude-3.5-sonnet",
        "messages": [
            {"role": "user", "content": "What is the meaning of life?"}
        ],
    },
)
response.raise_for_status()

print(response.json())
```

Only the "model" field changes; the endpoint, headers, and message format stay the same. Refer to RedPill’s Supported Models List for the complete catalog and the exact model names to use.
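Because only the model name changes, sending the same prompt to several models is a loop over names. The sketch below uses only the standard library's `urllib.request` so it runs without `requests`; the helper names `build_request` and `ask` are hypothetical, and the endpoint URL and payload come from the examples above.

```python
import json
import urllib.request

REDPILL_URL = "https://api.red-pill.ai/v1/chat/completions"


def build_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build a chat completion request; only the "model" field varies per model."""
    body = json.dumps({"model": model, "messages": [{"role": "user", "content": prompt}]})
    return urllib.request.Request(
        REDPILL_URL,
        data=body.encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str, api_key: str, timeout: float = 60.0) -> dict:
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(build_request(model, prompt, api_key), timeout=timeout) as resp:
        return json.load(resp)
```

For example, `for name in ["gpt-4o", "claude-3.5-sonnet"]: print(name, ask(name, "What is the meaning of life?", api_key))` sends the same question to both models. Separating `build_request` from `ask` keeps the request construction testable without network access.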

Key Benefits of RedPill:

- Single Platform Access: Eliminates the need for multiple registrations and API keys by offering a centralized interface to various models.

- Rapid Integration: Quickly access the latest models as they become available, ensuring your applications leverage cutting-edge AI technology.

- Cost-Effective: RedPill keeps per-model pricing in line with the official providers' rates and offers discounted credit packages, which can lower your overall cost.

RedPill makes it straightforward to access leading AI models, and the same API works for projects of any size. Whether you’re developing a web app, working on a backend system, or testing ideas in a shell script, a single RedPill API key and endpoint cover every model in the catalog.

For more information and to sign up, visit the RedPill Website.